Research

Overview

Silicon Neuronal Networks: a neuromimetic system

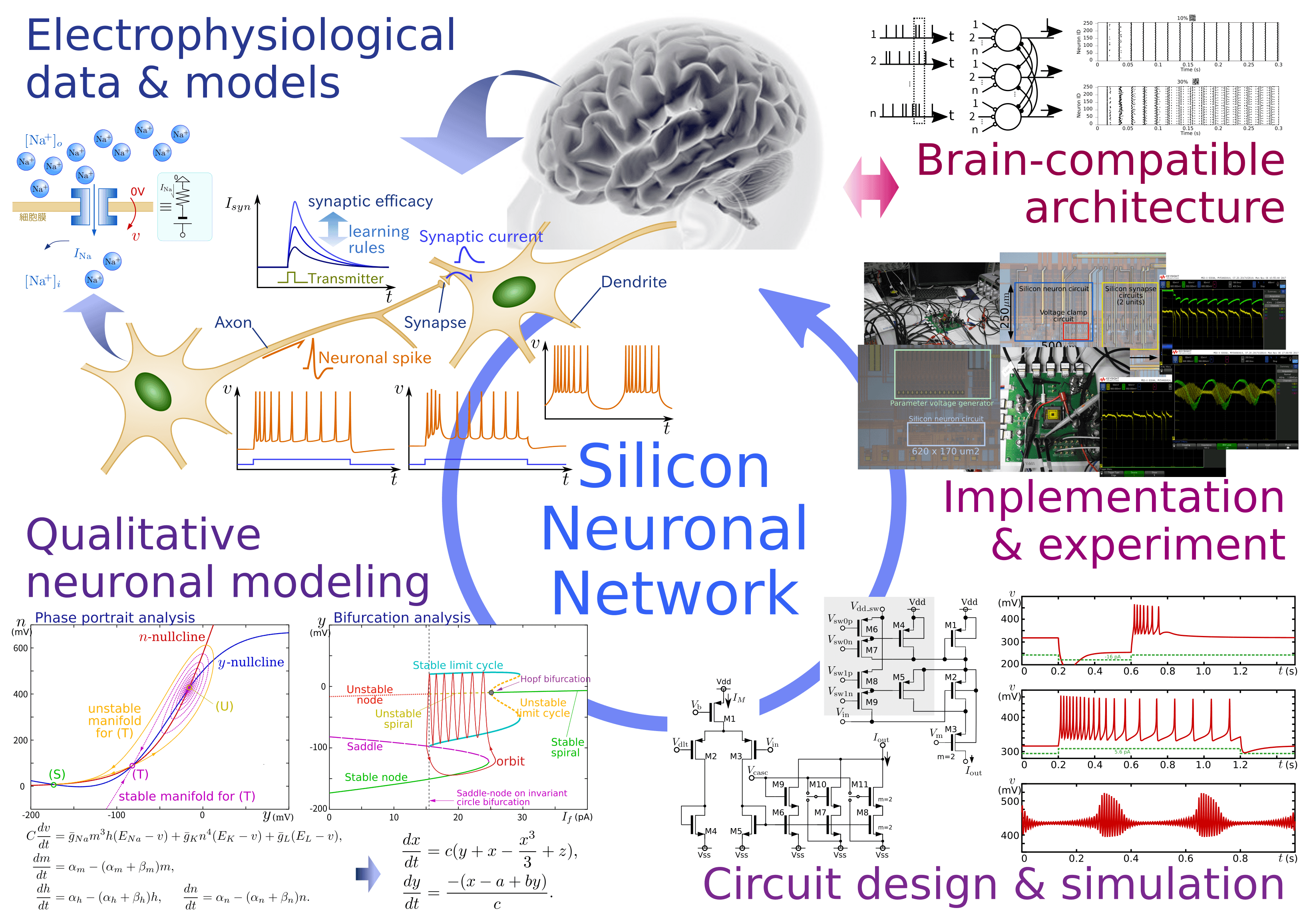

The lab is working on Silicon Neuronal Networks (SNNs) as a fundamental technology for “brain-compatible” computing systems that process information on the same principles as the brain. An SNN is an artificial network of neuronal cells built from electronic circuits that reproduce the electrophysiological activity of the nervous system.

This neuromimetic approach tries to reconstruct the brain’s information processing scheme on electronic circuit chips. It is expected to become a common technology not only for next-generation AI capable of processing massive data streams in a robust, instantaneous, autonomous, and intelligent manner, but also as the foundation of future information processing systems, when advances in the brain-machine interface (BMI) blur the border between the brain and machines. Our final goal is to build a celebration machine on the SNN platform.

In this bottom-up approach, from the level of the neuronal cell to that of high-order brain functions, we focus on two big questions. The first concerns hardware implementation: how can silicon neurons and synapses be designed to be as power-efficient and as amenable to large-scale integration as their biological counterparts? The second is more theoretical: how do neuronal spikes code information, and how do brain microcircuits process information based on them? Following these two questions, the lab has two major topics, analog and digital SNNs. Nonlinear mathematics is our major tool in addressing these multidisciplinary topics.

Analog SNNs

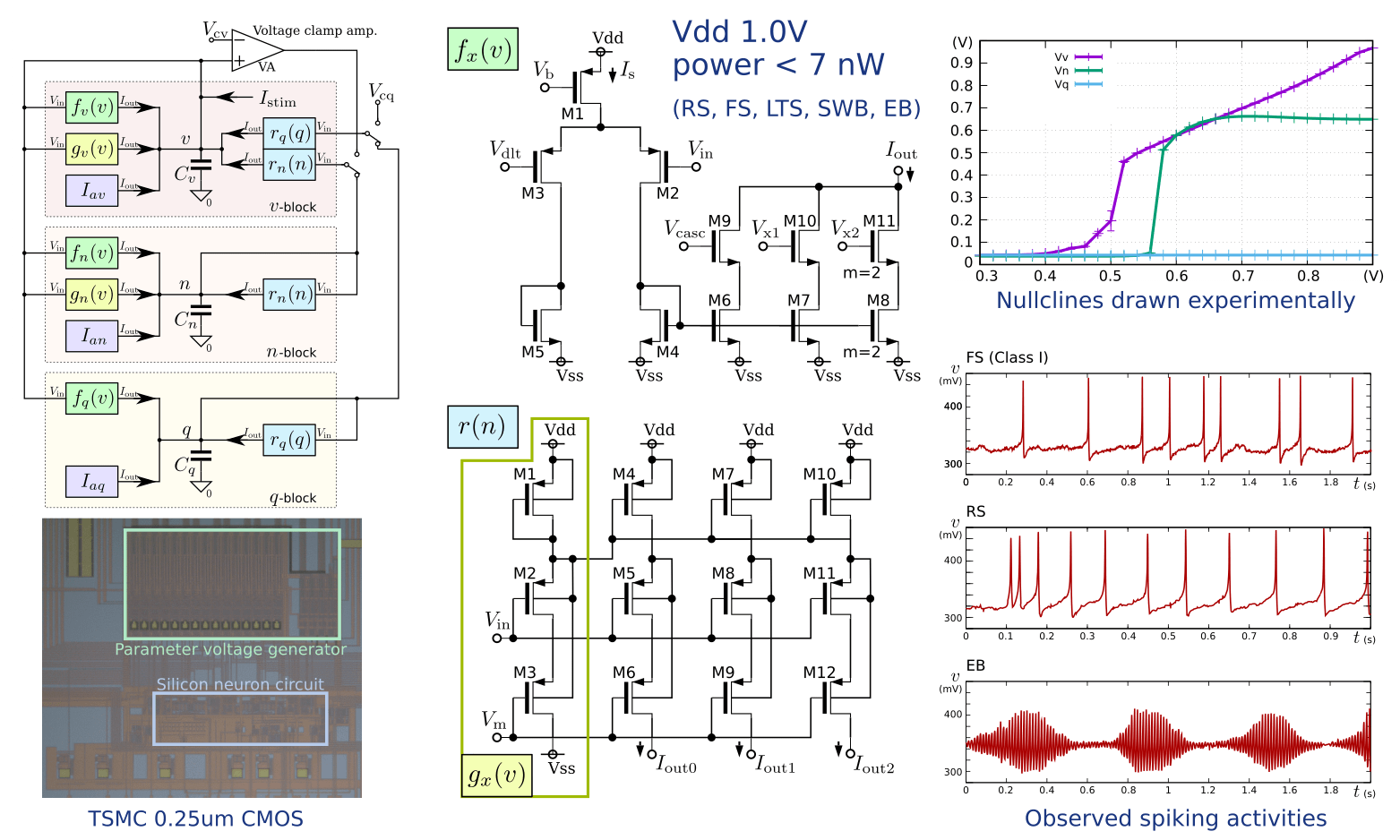

In analog circuits, the differential equations of neuronal models are solved by exploiting the physical characteristics of electronic components. For example, integration is performed directly by accumulating current on capacitors, and the sigmoidal characteristics in ionic channel models can be reproduced by differential pair circuits. Compared with implementations based on numerical simulation, including digital circuit implementations, far fewer transistors are required. The availability of ultralow-current devices, such as metal-oxide-semiconductor (MOS) transistors operated in their subthreshold domain, is a major advantage. Analog silicon neuron circuits built with them consume ultralow power, about 200 pW to 10 nW.
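The two circuit behaviours mentioned above can also be written down numerically. The following is a minimal sketch based on textbook idealizations, not on the lab's circuits: an ideal capacitor obeys C dV/dt = I, and an idealized subthreshold differential pair steers its bias current as a logistic function of the input voltage difference. The function names and all component values (`KAPPA`, the 1 pA and 1 pF figures, the bias current) are illustrative assumptions.

```python
import math

U_T = 0.02585   # thermal voltage near 300 K, in volts
KAPPA = 0.7     # assumed subthreshold slope factor (illustrative value)

def capacitor_voltage(i_in, c, dt, steps, v0=0.0):
    """Forward-Euler integration of C * dV/dt = I for a constant input current."""
    v = v0
    for _ in range(steps):
        v += (i_in / c) * dt
    return v

def diff_pair_current(v1, v2, i_bias):
    """Idealized subthreshold differential pair: the current in one branch
    is a logistic (sigmoidal) function of the input difference v1 - v2."""
    return i_bias / (1.0 + math.exp(-KAPPA * (v1 - v2) / U_T))

# 1 pA flowing into 1 pF for 1 s (hypothetical values).
v_end = capacitor_voltage(i_in=1e-12, c=1e-12, dt=1e-3, steps=1000)

# With equal inputs the bias current splits evenly between the two branches.
half = diff_pair_current(0.5, 0.5, i_bias=1e-9)
```

In an analog chip neither loop nor exponential is computed: the capacitor and the transistor pair produce these relationships physically, which is why so few transistors are needed.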

One factor to be noted is the physical noise in electronic devices. From the viewpoint of neuromimetic systems this is not necessarily a drawback, because information processing in the nervous system is thought to utilize the physical noise in ionic channels and other physical processes. The most serious drawback of analog circuits is their susceptibility to variability in transistor characteristics, which is particularly severe in the subthreshold domain. This variability originates from the fabrication process and is amplified as transistors are made smaller.
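A rough calculation suggests why variability weighs so heavily in the subthreshold domain. This sketch compares two idealized textbook transistor models, neither taken from the lab's designs: an exponential subthreshold model and a square-law above-threshold model. The slope factor, the 10 mV threshold shift, and the 0.5 V overdrive are assumed values chosen only for illustration.

```python
import math

U_T = 0.02585  # thermal voltage near 300 K, in volts
KAPPA = 0.7    # assumed subthreshold slope factor (illustrative value)

def subthreshold_current_ratio(delta_vt):
    """Current ratio when the threshold voltage drops by delta_vt volts,
    using the idealized model I ~ exp(KAPPA * (Vg - Vt) / U_T)."""
    return math.exp(KAPPA * delta_vt / U_T)

def square_law_current_ratio(delta_vt, overdrive):
    """Same threshold drop in the idealized above-threshold model
    I ~ (Vg - Vt)**2, where overdrive is Vg - Vt before the shift."""
    return ((overdrive + delta_vt) / overdrive) ** 2

# Hypothetical 10 mV threshold mismatch between two nominally identical transistors.
sub_ratio = subthreshold_current_ratio(0.010)
sat_ratio = square_law_current_ratio(0.010, overdrive=0.5)
```

Under these assumptions the same 10 mV mismatch changes the subthreshold current by roughly 30 percent but the above-threshold current by only about 4 percent, because the subthreshold current depends exponentially on the threshold voltage.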

We developed a new design approach for SNN circuits, the qualitative-modeling-based approach, which utilizes techniques of nonlinear mathematics to solve this problem. The most recent generation of silicon neuron circuits in the lab is capable of simulating six important neuronal cell classes, including Regular Spiking, Fast Spiking, and Elliptic Bursting, with less than 7 nW. Each circuit is equipped with parameter voltage inputs that are used to configure its neuron class and more detailed characteristics.

Current projects for analog implementation include improving the integration of synaptic circuits, developing an automatic parameter voltage tuning algorithm, and integrating novel ultralow-power analog memory devices into the SNN chip.

Digital SNNs

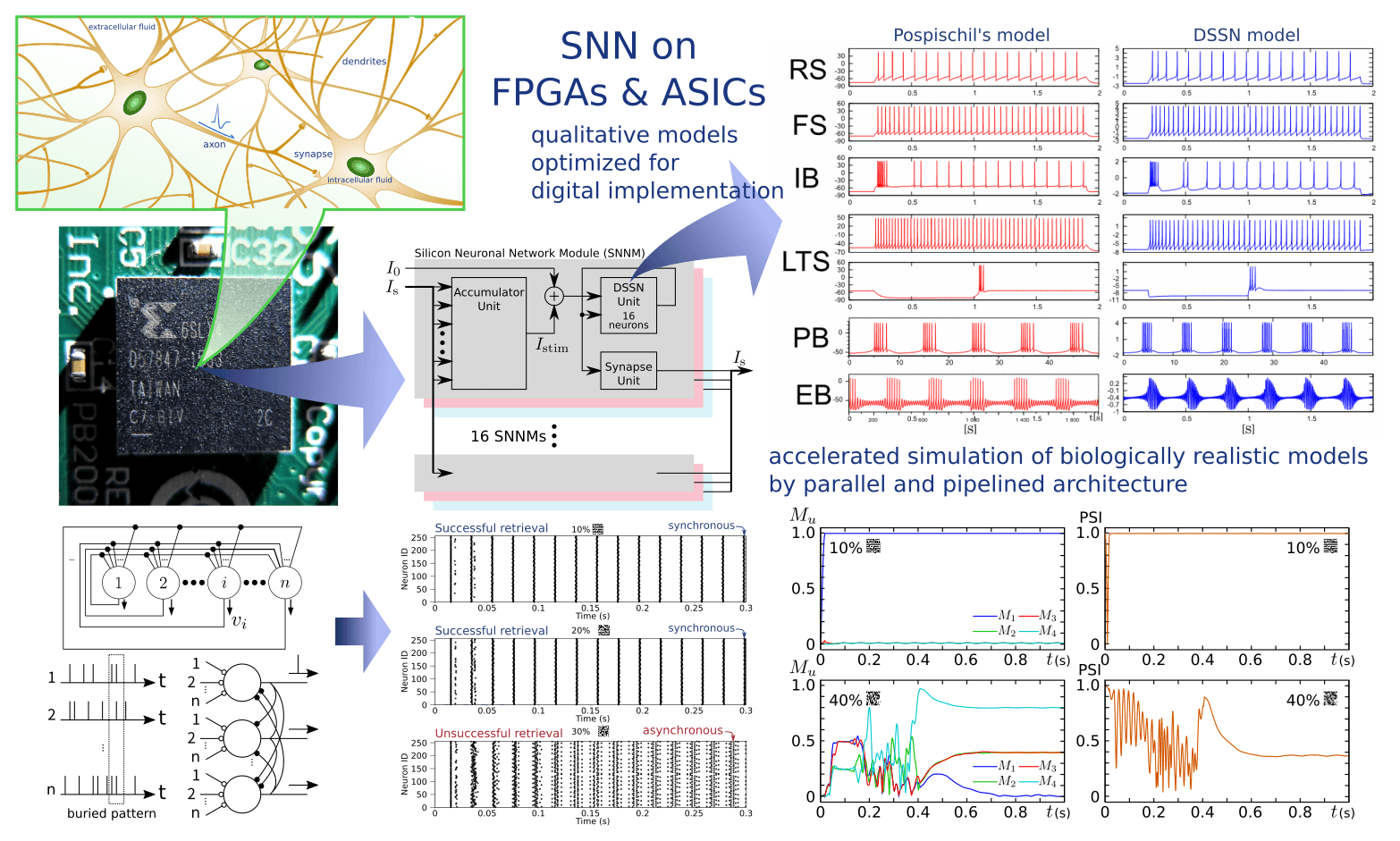

A traditional strategy for making circuits less susceptible to transistor variability is to regard the transistors as devices with only two states, 0 and 1. Digital circuits enabled large-scale integrated circuits (LSIs), which form the physical foundation of current finite-state-machine-based information processing systems. LSI technology is constantly evolving through the investment of huge financial and human resources. These days it is becoming more optimized for massively parallel and low-power computing. For example, this has accelerated the evolution of general-purpose graphics processing units, which provide a massively parallel computing environment that is also applicable to deep neural networks. More power-efficient chips dedicated to SNNs were developed by IBM and Intel; they comprise about one million neurons and consume less than 100 mW. This is far higher than the value expected for analog chips of the same scale, but very low compared with software simulation. From the viewpoint of large-scale integration with acceptable power, digital implementation of SNNs has a great advantage.
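The power comparison can be made concrete with back-of-the-envelope arithmetic using only the figures quoted on this page. The sketch below assumes that analog power scales linearly with the number of neuron circuits and ignores synapses, wiring, and peripheral circuitry, so the analog totals are a rough lower estimate rather than a measured value. The variable names are illustrative.

```python
N_NEURONS = 1_000_000                     # scale of the dedicated digital chips
DIGITAL_CHIP_POWER_W = 100e-3             # quoted upper bound: less than 100 mW
ANALOG_NEURON_POWER_W = (200e-12, 10e-9)  # quoted range per analog silicon neuron

# Upper bound on the digital power budget per neuron (about 100 nW).
digital_per_neuron_w = DIGITAL_CHIP_POWER_W / N_NEURONS

# Neuron-only power of a hypothetical analog chip at the same scale
# (about 0.2 mW to 10 mW under the linear-scaling assumption).
analog_total_w = tuple(p * N_NEURONS for p in ANALOG_NEURON_POWER_W)

print(f"digital: < {digital_per_neuron_w * 1e9:.0f} nW per neuron")
print(f"analog:  {analog_total_w[0] * 1e3:.1f} to {analog_total_w[1] * 1e3:.1f} mW for all neurons")
```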

Field-programmable gate arrays (FPGAs) are digital circuit chips whose logic is programmable by users. They are less power-efficient than such dedicated chips by about an order of magnitude, but their flexibility and low cost make them attractive to researchers working on digital SNNs. Another drawback of FPGAs is the limited amount of integrated memory. This is a bottleneck in large-scale integration because the number of synapses is generally more than three orders of magnitude larger than the number of neurons, and each synapse has to hold its synaptic efficacy.
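A small estimate shows how quickly synaptic storage grows. This is a sketch under stated assumptions: the helper name, the 16-bit width per synaptic efficacy, and the network sizes are hypothetical and are not taken from the lab's platform.

```python
def synapse_memory_bits(n_neurons, synapses_per_neuron, bits_per_weight):
    """Memory needed to store one efficacy value per synapse, in bits."""
    return n_neurons * synapses_per_neuron * bits_per_weight

# Hypothetical network: 1000 neurons, 1000 synapses each, 16-bit efficacies.
bits = synapse_memory_bits(1000, 1000, 16)
megabytes = bits / 8 / 1e6

print(f"{bits} bits = {megabytes:.1f} MB of synaptic memory")
```

Because the synapse count multiplies the neuron count by a factor of a thousand or more, the weight storage rather than the neuron logic tends to set the limit on network size.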

We developed an SNN platform for a single FPGA chip. The neuron model is optimized for FPGA implementation and supports 8 neuronal classes, including regular spiking and low-threshold spiking. The synaptic model supports 4 types of chemical synapses: NMDA, AMPA, GABAA, and GABAB. The maximum scale of the network is currently about 2000 neurons, a limit set by the amount of on-chip memory. On this platform, an auto-associative memory on an all-to-all connected network and spatio-temporal pattern recognition on a single-layer network with lateral inhibition were constructed and analyzed. It was shown that an auto-associative memory built from spiking neuronal networks outputs the retrieved pattern and its confidence at the same time.
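To illustrate what auto-associative retrieval on an all-to-all connected network means, here is a minimal sketch of a classical, non-spiking Hopfield-style network with Hebbian weights. This is a swapped-in textbook technique, not the spiking model used on the platform, and the `overlap` value is only a simple similarity measure, not the confidence output described above. All names and patterns are illustrative.

```python
def train_hebbian(patterns):
    """Build all-to-all weights from +1/-1 patterns with the Hebbian rule."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    w[i][j] += p[i] * p[j] / n
    return w

def recall(w, cue, sweeps=5):
    """Asynchronously update each unit to the sign of its summed input."""
    s = list(cue)
    n = len(s)
    for _ in range(sweeps):
        for i in range(n):
            h = sum(w[i][j] * s[j] for j in range(n))
            s[i] = 1 if h >= 0 else -1
    return s

def overlap(a, b):
    """Normalized similarity between two +1/-1 patterns (1.0 means identical)."""
    return sum(x * y for x, y in zip(a, b)) / len(a)

stored = [
    [1, 1, 1, 1, -1, -1, -1, -1],
    [1, -1, 1, -1, 1, -1, 1, -1],
]
weights = train_hebbian(stored)

cue = list(stored[0])
cue[0] = -cue[0]  # corrupt one element of the first stored pattern

retrieved = recall(weights, cue)
```

Starting from the corrupted cue, the network settles back onto the first stored pattern, which is the basic behaviour that an auto-associative memory provides.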

Current projects for digital implementation include improving our digital SNN models; developing a general spike information transmission bus that connects digital SNN chips, analog SNN chips, PCs, and other apparatus that handle spikes; and elucidating the information processing principles of brain microcircuits using our SNN platform.